You create a batch inference pipeline by using the Azure ML SDK.

You run the pipeline by using the following code:

from azureml.pipeline.core import Pipeline

from azureml.core.experiment import Experiment

pipeline = Pipeline(workspace=ws, steps=[parallelrun_step])

pipeline_run = Experiment(ws, 'batch_pipeline').submit(pipeline)

You need to monitor the progress of the pipeline execution.

What are two possible ways to achieve this goal? Each correct answer presents a complete solution. NOTE: Each correct selection is worth one point.

A . Run the following code in a notebook:

B . Use the Inference Clusters tab in Machine Learning Studio.

C . Use the Activity log in the Azure portal for the Machine Learning workspace.

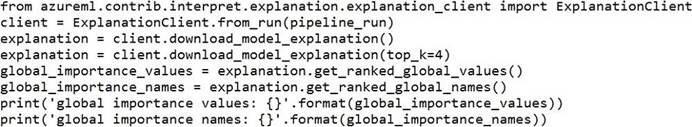

D . Run the following code in a notebook:

from azureml.widgets import RunDetails

RunDetails(pipeline_run).show()

E . Run the following code and monitor the console output from the PipelineRun object:

pipeline_run.wait_for_completion(show_output=True)

Answer: DE

Explanation:

A batch inference job can take a long time to finish. This example monitors progress by using a Jupyter widget.

You can also monitor the job’s progress by using:

– Azure Machine Learning Studio.

– Console output from the PipelineRun object.

from azureml.widgets import RunDetails

RunDetails(pipeline_run).show()

pipeline_run.wait_for_completion(show_output=True)
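
Both calls above either render a widget or block until the run ends. Where a script needs to report status on its own schedule, a polling loop over the run's get_status() method is another possibility. The sketch below is an illustration only and is not taken from the referenced documentation: poll_until_done and FakeRun are hypothetical names, the set of terminal status strings is an assumption, and FakeRun stands in for a real PipelineRun so the example runs without a workspace.

```python
import time

# Assumed terminal states; check the SDK documentation for the full list.
TERMINAL_STATUSES = {"Finished", "Failed", "Canceled"}


def poll_until_done(run, interval_seconds=30.0, sleep=time.sleep):
    """Poll run.get_status() until a terminal state is reached.

    Prints each status change and returns the list of distinct
    consecutive statuses that were observed.
    """
    seen = []
    while True:
        status = run.get_status()
        # Record and print only when the status changes.
        if not seen or seen[-1] != status:
            print(f"Pipeline status: {status}")
            seen.append(status)
        if status in TERMINAL_STATUSES:
            return seen
        sleep(interval_seconds)


class FakeRun:
    """Hypothetical stand-in for a PipelineRun; replays canned statuses."""

    def __init__(self, statuses):
        self._statuses = iter(statuses)

    def get_status(self):
        return next(self._statuses)


# Demo with the stand-in object and no real waiting between polls.
history = poll_until_done(
    FakeRun(["NotStarted", "Running", "Running", "Finished"]),
    interval_seconds=0,
    sleep=lambda seconds: None,
)
```

With a real run, the call would be poll_until_done(pipeline_run), assuming pipeline_run is the object returned by submit() in the question.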

Reference: https://docs.microsoft.com/en-us/azure/machine-learning/how-to-use-parallel-run-step#monitor-the-parallel-run-job
