HOTSPOT

You train a classification model by using a decision tree algorithm.

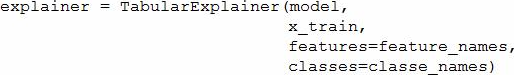

You create an explainer by running the following Python code. The variable feature_names is a list of all feature names, and class_names is a list of all class names.

```python
from interpret.ext.blackbox import TabularExplainer
```

You need to explain the predictions made by the model for all classes by determining the importance of all features.

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

Answer:

Explanation:

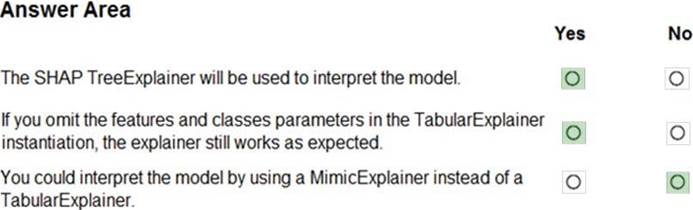

Box 1: Yes

TabularExplainer calls one of the three SHAP explainers underneath (TreeExplainer, DeepExplainer, or KernelExplainer).

Box 2: Yes

To make your explanations and visualizations more informative, you can pass in feature names and, for classification models, the output class names.
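As a minimal sketch of how the names might be passed in, the helper below wraps the explainer construction and a global explanation call. The function name `explain_all_classes` and the arguments `model`, `x_train`, and `x_test` are assumptions made for illustration; they do not come from the question. The sketch also assumes the interpret-community package is installed when the function is actually called.

```python
def explain_all_classes(model, x_train, x_test, feature_names, class_names):
    """Return overall feature importances for a fitted classifier.

    All argument names are hypothetical; they stand in for a trained model,
    its training data, and the evaluation data to explain.
    """
    # Imported lazily so this module loads even where interpret-community
    # is not installed (an assumption of this sketch, not a requirement).
    from interpret.ext.blackbox import TabularExplainer

    # Feature and class names are optional, but they label the output.
    explainer = TabularExplainer(
        model,
        x_train,
        features=feature_names,
        classes=class_names,
    )

    # A global explanation aggregates feature importance over the dataset.
    global_explanation = explainer.explain_global(x_test)
    return global_explanation.get_feature_importance_dict()
```

Called with a fitted decision tree and its data, this would return a dictionary that maps each feature name to its overall importance.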

Box 3: No

TabularExplainer automatically selects the most appropriate explainer for your use case, but you can also call each of its three underlying explainers (TreeExplainer, DeepExplainer, or KernelExplainer) directly.
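The automatic selection can be pictured with a small dispatch function. This is a hypothetical illustration only, not the library's internal code: the function `pick_shap_explainer` and the `model_family` labels are invented here, and the mapping simply assumes that tree-based models suit TreeExplainer, deep neural networks suit DeepExplainer, and KernelExplainer serves as the model-agnostic fallback.

```python
def pick_shap_explainer(model_family: str) -> str:
    """Return the name of the SHAP explainer suited to a model family.

    Hypothetical helper: the family labels "tree" and "deep" are
    assumptions of this sketch, not values used by the real library.
    """
    if model_family == "tree":
        return "TreeExplainer"
    if model_family == "deep":
        return "DeepExplainer"
    # Anything else falls back to the model-agnostic explainer.
    return "KernelExplainer"


# The model in this question is a decision tree.
print(pick_shap_explainer("tree"))
```

Under these assumptions, a decision tree model maps to TreeExplainer, which matches the reasoning for Box 1.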

Reference: https://docs.microsoft.com/en-us/azure/machine-learning/how-to-machine-learning-interpretability-aml